Preface

I am not a doctor, neuroscientist, etc. What I am is a businessperson and a student of history (and human nature) with regard to disruptive technologies and how the business and finance leaders of their time reacted to them.

EXAMPLE: Electricity transmission and use was not going to spread to the masses until the electric meter was invented. Until then there was no way to charge people for usage, which meant electricity was not going to proliferate. There are stories that J.P. Morgan (General Electric) “pulled the plug” once he realized that the funding he was providing to Nikola Tesla, for work that became the Wardenclyffe Tower on Long Island, NY, was for the wireless transmission of electricity. Morgan saw no way to track and monetize wireless power transmission, so he killed the project. (Ironically, wireless transmission of electricity would be required to meet the electrification goals some governments have laid out today.) The lesson is that business leaders can say a lot of things, but in the end they’re motivated by bottom-line margins. Improving the human condition is a motivation they claim only when profit is attached. There is a reason Tesla died poor and J.P. Morgan’s companies survive to this day.

As business leaders run headlong after AI solutions for everything in every function, their motivations are clear: 1st COST REDUCTION and 2nd (distantly) incremental revenue/growth. The discussions about growth are wrapped in vague language and strategies. The first challenge on the growth side is that most companies are going down the same path. At least for B2B companies, AI applied universally raises the floor for everyone by a similar amount. AI can’t make non-world-class assets world class if everyone is applying AI to both their world-class and non-world-class assets. What changes is the performance of every class of asset at once, while the relative standing stays roughly where it was. In a prior article, I estimated the impact of AI on white-collar work by generation. That estimate is provided at the end. Feel free to argue your position on impact.

Cognitive decline from AI is not a new topic. The reality is that there are past examples of technology adoption where longstanding human capabilities have already degraded on a mass scale. Henry Kissinger, Eric Schmidt (former Google CEO), and Daniel Huttenlocher published “The Age of AI: And Our Human Future” in 2021. The book discusses how AI can alter humans’ perception of reality and how masses of people could become cognitively impaired by it. I draw on other sources for this article, not their book. However, there are likely parallels in our assessments, because independent assessments of the same situation tend to reach similar conclusions.

The Brain

As stated, I’m not a doctor or neuroscientist. However, over the years I’ve been intrigued by documentaries about people with brain function anomalies, changes (positive and negative) after brain injuries, the memory oddities of Alzheimer’s patients and people with brain injuries, and the generally observable changes (positive and negative) in the public’s overall mental processing capabilities. Some of this interest has been self-discovery: understanding the consequences of being ambidextrous and the other areas it affects.

Even those of us with a public-school education know that certain sections of the brain control certain functions: autonomic functions, vision, hearing, etc. It is also commonly held that analytical and artistic functions are split between the two halves of the brain (ambidextrous people can differ in this regard). What I’ve learned through observation and these documentaries is how finely the brain partitions certain higher functions and areas of memory storage. Some discussion of the brain is necessary to forecast the potential impact of AI on cognition.

More than One Memory Bucket

It seems logical that visual memory, like vision itself, would be separated from auditory memory, just as sound processing is in the brain. But the divisions go further. If you research visual memory alone, you can find articles and stories about people who were either born deficient in a particular type of visual memory or became impaired through injury. If you ask an AI search tool about visual memory types, you might get a list like the following (source: Brave Browser AI):

· Iconic Memory: Also known as visual sensory memory, it is a brief (milliseconds) and fleeting storage of visual information, such as images or patterns, in the brain. This type of memory is responsible for our ability to recognize and recall visual stimuli, like a flash of light or a brief glance at an image.

· Echoic Memory: This type of memory is not explicitly mentioned in the search results as a distinct visual memory type. However, echoic memory refers to auditory sensory memory, which is responsible for the brief (2-4 seconds) storage of auditory information, such as sounds or voices. There is no direct equivalent in visual memory, but some researchers suggest that visual working memory may have a similar short-term storage component.

· Visual Short-Term Memory (STM): This type of memory stores visual information for up to 30 seconds, allowing us to use it in ongoing cognitive tasks. Visual STM representations are longer-lasting, more abstract, and more durable than iconic memory representations.

· Visual Long-Term Memory: This type of memory has no clear capacity limit and can store vast amounts of visual information, such as images, scenes, and events, for extended periods. Research has shown that visual long-term memory can retain information for years, with recognition rates remaining high even after extended periods of time.

· Visual Working Memory (WM): This type of memory encompasses both visual STM and visual WM functions. Visual WM is responsible for the storage and manipulation of visual information held in memory, allowing us to mentally manipulate and transform visual information.

Okay… This list has five types, but I’ve also seen seven listed. What is missing is any breakdown by the kinds of things a human might see (icons, symbols, faces, objects, colors, etc.) and whether the brain stores each kind of information in a different place.

Object agnosia is the inability to recognize inanimate objects, or the loss of the memories needed to do so. In a documentary I watched about ten years ago, one of the subjects was a man whose accident left him completely unable to identify inanimate objects. He could not tell a banana from a ball or a can. However, his facial recognition memory was completely intact: he could recognize certain faces (e.g. Albert Einstein) even when they were presented in an abstract, ink-blot style. The epiphany I took away at the time, which bears on the AI discussion, was that, like a computer’s, our memory storage is divided by type and is not interchangeable, at least by default. We hear about stroke patients relearning to speak, but that is the processing of memory into speech, not the memories themselves. Perhaps memory can be reallocated the way processing can, but what if both are damaged or atrophied?

Neuroplasticity, in the simplest terms, is the brain’s ability to “remap” itself for both processing and memory. In recent years there have been more studies of this capability, along with multiple tools and games/apps focused on increasing it. As mentioned, stroke patients and other people with brain injuries were already known to relearn things as new areas of the brain take over processes that had been mapped to a now-damaged section. The consistent message about triggering neuroplastic changes is that they depend on external influences, including proactive, self-motivated exercise. The brain does not spontaneously remap its functions without external motivating factors. In the context of this article, neuroplasticity has no bearing, because adopting AI involves the willful outsourcing of certain brain functions rather than exercising them.

Use It or Lose It

Those of us who work out at the gym know the consequences of missing extended periods and then going back, particularly as we get older. Fortunately, enough passes in front of a mirror will typically provide sufficient motivation to endure those consequences and get back to the gym. Of course, O-L-D is undefeated, and all forms of physicality degrade over time regardless of our dedication. This is also true for our brains and mental skills. The difference is that there are no mirrors, only much more subtle hints that O-L-D is affecting us cognitively. My observation and opinion is that brain-related skills are far more perishable than physical skills, and harder to recapture once they’ve degraded from disuse.

What is important is that different memory and mental processing functions (even higher-level functions) are segregated within the brain. If you no longer use something because it’s been “outsourced” to a third party that does it as well as or better than you, those parts of your brain don’t take on other tasks! (Examples follow.)

Examples of Human Skills and Cognitive Loss Due to Technology

Navigation

One of my favorite examples is the effect of widespread GPS navigation. Those of us of a certain age could, even as young children, direct someone to our home by landmarks as they drove us there. Navigation was a survival skill essential to the preservation and development of humans. When we were hunter-gatherers, remembering the safe and resource-abundant routes was essential to survival. Whole civilizations were wiped out by cataclysmic events that rendered their existing navigational knowledge useless. Today, many people, even in older generations, have stopped making the effort to store the details of a trip they make periodically but not daily. Even after dozens of visits over a few years, many couldn’t get to the location without GPS. Even the general “sense of direction” atrophies in the “real world”. Oddly enough, mapping and directional skills in the virtual world may be improved by immersive 3D gaming.

While GPS is infinitely more convenient, using GPS doesn’t result in any net gain of capability for human beings. The landmark and directional processes and memory are not freed to do something of higher order or priority. They’re just not used anymore.

Problem Solving

Primary education and many parts of university education were not strong on problem solving or critical thinking even before the digital age. Most educational disciplines are about memorization and finding answers. However, disciplines that apply base principles to related problems of any variety and complexity require an understanding of those principles. These include higher-level mathematics, sciences, engineering, statistics, etc. Of course, school is an exercise in demonstrating understanding of a subject primarily by producing correct answers. Not surprisingly, the disciplines listed above routinely require students to “show their work” to obtain an answer. In those disciplines, a student can produce a wrong result and still earn most of the available points by demonstrating they knew how to work the problem.

The takeaway is that learning to work problems in those disciplines is a higher priority than finding the answer. Eventually you need to be able to do both, but understanding the principles comes first.

Enter the internet…

Prior to the internet, preparing for tests in those process-based disciplines involved homework problems, practice tests, example problems in the text, quizzes, etc. In some instances, the final answer was provided so the student could work the problem to the end and compare their result with it. Nothing was more frustrating than working a problem multiple times and not matching the answer in the “back of the book”, only to find out later that the book’s answers weren’t always correct. Classroom instruction might show a student the method for expanding a process from two elements to three, with the understanding that going from three to four, and from four to five, followed a similar process. In the past, students had to learn to extend processes to address new and possibly more complex problems. However, the internet has allowed students to find exact examples of every problem without learning to extrapolate the base process. They simply plug the numbers for their problem into the identical framework of the internet example. This is what we called “plug and chug”.

Unless someone recognizes that a student is “finding answers”, not “working problems”, that student is likely to fail, not because of a lack of talent but because of the availability of easy answers. Trying to memorize someone else’s work is not nearly as effective as learning to do the work yourself.

Historic Perspective on Disruptive Technology

It’s a bit entertaining to watch people on LinkedIn and elsewhere trying to paint a picture of how AI will be used, particularly when the author is looking for a rationale for why their specific profession will be augmented rather than ended by AI. It’s a natural human response, but unfortunately it’s not grounded in history. The most pervasive argument for this point of view rests on AI’s current limitations, as if what is happening today were permanent. Forecasts biased toward underestimating technological growth almost always turn out poorly for those who subscribe to and act on them.

December 17th, 1903, to July 20th, 1969 (65 Years 7 Months)

In a single lifetime, human beings went from the first powered, heavier-than-air flight to walking on the moon.

July 20th, 1969, to September 29th, 2024 (55 Years 2 Months)

In less than a lifetime, the computing power that enabled the moon landing is now dwarfed by the computer processing available to almost every human on the planet. It would take 120 million of the Apollo 11 guidance computers to equal the computing power of one iPhone 16.

December 1989, to January 2012 (22 Years 1 Month)

In one generational span, digital imaging displaced a century-old technology (chemical-based photography), effectively ending its use outside special applications, and drove one of the most iconic US companies in history (Kodak) into bankruptcy. The Fujifilm DS-X was the first digital camera publicly marketed and sold in Japan. (There were far earlier prototypes, including one invented at Kodak in 1975.)

1973 to 2019 (46 Years)

In less than a lifetime, computer-generated imaging went from Harold Cohen’s AI program AARON, which produced artistic impressions of humans, to Nvidia’s generative adversarial networks (GANs) producing photorealistic artificial images. Today we have photorealistic images of influencers and models in exotic places that are completely artificial yet indistinguishable from real photos. Video is improving rapidly and will reach parity with real video in no more than a year or two.

These historical examples suggest that the appropriate timescale for disruptive technology is measured in generations (~20-year spans). An earlier AI impact exercise of mine (at the end of this article) used generations as the unit of time, but for AI a generational span may be too conservative. AI experts who have been predicting certain milestones over the past 20 years have had to accelerate their timelines from generations to decades, and now to years. The significant challenge in forecasting AI capability is understanding what happens when AI starts directing its own development, improvement, and augmentation.
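The durations in the timeline headings above are plain calendar arithmetic, and it is easy to slip by a month or even a year. As a quick check, here is a minimal Python sketch that counts whole years and leftover months between two dates. The `span` helper is a hypothetical name written only for this illustration. Because the digital-imaging milestones give only a month and a year, the 1st of each month is assumed for those two dates.

```python
from datetime import date


def span(start: date, end: date) -> tuple[int, int]:
    """Return (whole years, leftover whole months) between two dates."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    # If the end day-of-month hasn't reached the start day, the last month is incomplete.
    if end.day < start.day:
        months -= 1
    return divmod(months, 12)


# Dates from the timeline. The text gives only month and year for the
# digital-imaging span, so the 1st of each month is an assumption.
milestones = [
    ("First powered flight to Moon landing", date(1903, 12, 17), date(1969, 7, 20)),
    ("Moon landing to September 29, 2024", date(1969, 7, 20), date(2024, 9, 29)),
    ("Fujifilm DS-X to January 2012", date(1989, 12, 1), date(2012, 1, 1)),
]

for label, start, end in milestones:
    years, months = span(start, end)
    print(f"{label}: {years} years {months} months")
```

Counting total whole months first and only then splitting them into years keeps the year and month figures consistent with each other.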

White Collar AI Cognitive Decline Risk

There is a significant difference between using your mind to do something and using it to ask for something. Right now, many people think there is a future for them as metaphorical “AI travel agents”. Just as technology eliminated the travel agents and office workers of the past, it will eliminate the need for proficient “askers” as it advances. Worse, as a former doer becomes an asker, their ability to recognize problems in the AI’s output will diminish. AI, particularly in these early stages, will be wrong, produce poor output, or produce fraudulent output. However, its adoption will continue, and its nascent failures will be overlooked or blamed on poor “AI travel agents”. Understand that “asking”, even if it’s currently technical in nature, isn’t nearly as valuable or distinguishing a talent as being able to do something. A few AI myths to consider in terms of white-collar skill decline:

1. AI Makes Me More Productive – Outsourcing what you do to AI doesn’t make you more productive; it makes the organization more productive, because it can get the same amount of work out of fewer of you. Outsourcing your thinking to AI doesn’t make you analyze, code, etc. faster or better. It weakens those skills over time.

2. AI Frees Me Up to Do More Value-Added Things – Are those value-added things already part of your job? Are they things your supervisor does today? This is a direct extension of the first myth to other organizational tasks. As with your direct job, the AI will displace someone somewhere.

3. AI Will Increase My Higher-Level Skills – The assumption here is that a tool designed to think for you will be no more than “arms and legs”. Outsourcing thinking doesn’t add to your skills; it detracts from them. More likely, it will enhance your ability to conceptualize things to ask for in the short term but diminish your ability to make or do things.

4. My Company Sees AI as a Supplement or Enhancer of Human Talent – While there will be some examples where this is true, in most cases the goal for AI (stated or not) will be to replace humans once AI eliminates the value of human involvement. Once AI’s ability to translate business needs into output improves beyond what the “AI travel agents” provide, every human job linked to data-based output will be at risk.

a. All Companies See AI the Same Way – For every venture capital or tech start-up where AI may be more supplemental, there are thousands of businesses in mature markets that are looking continuously for cost advantages. This behavior drove outsourcing, offshoring, etc. and AI will be seen in the same light. Even with AI promoted as a growth tool, costs and cost savings are always easier to estimate and forecast than growth.

What distinguishes white collar roles from blue collar roles:

· Work Setting

o White-collar jobs: Office-based or indoors (e.g. laboratory, clinic); some roles can be done at least partly remotely; field work is typically limited to areas like engineering or blue-collar supervision

o Blue-collar jobs: Field roles at factories, warehouses, construction sites, outdoors, etc.

· Type of Labor

o White-collar jobs: Primarily involve intellectual labor, requiring education, training, and specialized skills.

o Blue-collar jobs: Manual labor or physical effort, specialized skills developed through training or apprenticeships (trades)

· Education and Training

o White-collar jobs: Typically require formal higher education, such as a bachelor’s degree or higher

o Blue-collar jobs: Often no formal education requirement; on-the-job training, apprenticeships, vocational education

· Pay Structure

o White-collar jobs: Typically salaried

o Blue-collar jobs: Typically paid an hourly wage, with overtime and other considerations

Of the distinctions between white- and blue-collar roles, work setting and type of labor are highlighted here. That is because one of the most contentious issues in the past year has been companies issuing return-to-work notices to employees who had been allowed to work remotely, or who were hired as remote employees, during and after COVID. While the return-to-work edicts clearly include an element of reduction in force disguised as an office policy change, intellectual labor roles that can be done remotely (i.e. where direct interpersonal interaction isn’t deemed essential to the role) are prime targets for AI substitution. Interestingly, it is these professionals’ separation from the broader organization, except virtually, that will make it difficult for them to recognize the AI risk to their roles and skills. For them, adopting AI and outsourcing tasks risks accelerating a decline in organizational effectiveness and agility.

In the world of complex intellectual processes and development projects, the same problems of outsourcing and moving from “doing to asking” will expand beyond basic task execution to project management and guidance. At the highest level, all companies (at least in theory) develop strategies to meet the financial expectations of the company’s owners. As AI at the lower levels of project execution expands the number of component task options beyond a human project manager’s capacity to review and choose, project managers will have to use AI to evaluate the available scenarios. Moreover, the breadth and complexity of the metrics an AI might use internally to evaluate millions of strategic combinations will ultimately have to be distilled into the simpler metrics humans can absorb. While the AI will produce financial estimates and outcomes for the various scenarios, the details behind those estimates will be the stereotypical “black box” to humans. The AI says option A produces ten and option B produces six, and the humans pick A. At that point, the project manager is no longer the project manager, just the liaison between the AI project manager and senior management.

“Copium” – Looking for Plausible Lies to Comfort Oneself

An argument could be made that the most damaging lies in life are the ones people tell themselves. In topics such as the impact of AI on cognitive skills and job prospects, it’s difficult to remove yourself from the discussion and be as objective as possible. Ironically, even when there is significant historical precedent, people will choose to believe this time will be different, or at least that it will be different for them. Recognizing that the “copium” you can easily find on LinkedIn today is a self-preserving lie can help a person be more objective in their own assessment of AI and their personal action plan.

· “It will be a long time before AI significantly replaces humans in most roles.” – The first problem with this cope is defining “how long is a long time?” As previously covered, disruptive technology does take some time, but AI’s impact is likely measured in generations (~20 years), not lifetimes. If you’re 60 years old, AI is arguably not a major professional concern for you, but if you’re 40 years old or younger, certain professions may be decimated by AI before you reach your 60s. An estimate I made about a year ago of professions and impact (at the end of this article) was framed in terms of generations. At this point, it may be a bit conservative. Since that article was written, AI experts’ estimates of when full AGI capability will arrive have moved earlier by years.

· “AI doesn’t work well enough yet to make a major impact.” – This assumes AI must equal humans in every respect before it will replace them. That assumption doesn’t even hold when humans replace humans. A generation ago, the tech sector was hit with the great self-fulfilling lie: “There aren’t enough technical people, so we need to allow the importation of H-1B visa applicants to make up the difference.” The claims of worker shortages were at odds with the pay rates for US-born technical people, which were not keeping up with inflation. This mismatch between the economics and the “message” of worker shortages fell on deaf ears. The state of California, energy companies, Walt Disney, etc. were all in the press at the time for having forced US employees to train their H-1B replacements or lose any severance. The replacements were not better, and service suffered, but the companies were able to destroy wage rates. This removed incentives for future US professionals to pursue certain areas of tech, fulfilling the prophecy of talent shortages that has come up again in recent weeks. Capable, trained, and proven humans were replaced with lower-quality output from lower-cost human alternatives. AI will be the same in certain professions almost immediately. What companies have learned is that customers will eventually normalize to lower levels of competence and service when there are no other options.

· “There is too much risk to companies to push things to AI without the human safety net.” – While there are always exceptions, most companies and company leaders are herd animals. As a few companies move, so will the herd. This will be facilitated by the large consulting firms, which are also looking to replace large numbers of their human assets with AI solutions. A widespread trait of company “leadership” is that it doesn’t lead as much as look for patsies to blame for decisions and strategies that fail. One of the jokes in larger companies is the consulting-firm revolving door: BCG is on point until blame needs to be assigned to it, allowing Bain to enter, then IBM, then McKinsey, until at some point the wheel spins back around to BCG. AI will be an even better “blame magnet”. To be clear, there are arrogant know-it-alls who make a lot of decisions in companies, but it would shock many to realize how many company leaders are more like politicians, worried only about keeping their jobs and compensation.

· “AI Can’t Replace the human touch, empathy, sense of the room, etc.” – This one has come up more and more recently. People making these comments are making three significant assumptions: (1) human interaction will retain significant value for companies, (2) AI will not eventually be able to replace humans in “human interaction”, and (3) humans will be the primary consideration for resource deployment or sales focus in the future. These require a little more detail.

o Human Interaction Value – Automated phone systems, chat bots, etc. are already prevalent. Yes, most people would prefer a person in support, but as stated earlier, people can be conditioned to accept less. The bigger problem is the growing number of people, particularly young men, who are increasingly isolated from the world. For many of them, human interaction is more often a source of pain and derision. AI is already filling that role in rapidly increasing numbers.

o Humans Prefer Humans – As indicated above, that situation is changing as people become less civil, more superficial, and more motivated by public adulation than by accomplishment or real, meaningful interaction.

o Humans Are the Priority for Businesses to Prosper – More on this in the last point (AI can’t exist without humans), but humans already displace other humans as market priorities all the time. Animals have never displaced humans, but AI is something different. When the point is reached that there are so few humans that understand how things work, who exactly will be the priority?

· “AI will be like other technology, longer on promise than delivery (an AI bubble)” – This “copium” extrapolates from prior technological cycles that have superficial similarities but are dramatically different at the core. While most people think “tech bubbles” started in the 1990s, overexuberant promises about even older technologies were just as prevalent in their day: electricity was so magical as to be a solution for everything, radiation and radioactive materials were seen similarly, beliefs about the future value of radio communication were as optimistic as those about the internet later, and so on. All these technologies did transform the world, but not as much as some speculated at the time. Similarly, the internet, computers, etc. have transformed the world but haven’t, at least yet, reached the most optimistic outlook on their value. Why? Because none of those technologies challenged the preeminence of human intellect. In 1997, IBM’s Deep Blue defeated reigning world chess champion Garry Kasparov in a match, the first time a computer had beaten a reigning world champion in a match. It was an interesting moment, but significant resources and customized training (specifically about Kasparov) were employed to outperform a single human in a single test of intellect or mental processing power. A novelty, for sure, at the time. Today’s AI isn’t about direct programming; it’s about learning models. While humans currently drive the updates and revisions to these models, it’s only a matter of time before AI achieves what only humans have been able to do until now: improve its own knowledge and thinking processes. As discussed earlier, neuroplasticity tools and games have been developed to help humans improve their mental processing. Similar tools will be part of AI but will operate on a far larger intellectual scale.

· “AI can’t exist without humans.” – Most humans can’t exist without other humans either. The issue in the white-collar and business spaces is who or what drives priority for resource allocation in the future. Most of human existence has been dominated by feudal/monarchical systems in which a few dominated other humans in the pursuit, collection, preservation, and defense of resources. Only in relatively recent history has a broader distribution of control and access to resources existed (e.g. the middle class). Recent history produced significant leaps in human technological development as more human intellects had opportunities to develop and create. However, both the feudal system that dominated most of human history and what came later were driven by the preeminence of human intellect in identifying, finding, collecting, and utilizing resources. If AI becomes the preeminent intellectual force with regard to resources, history indicates that the longer-standing feudal structures will prevail. When collective human intelligence is less than AI’s, humans’ value becomes more about the physical world than the intellectual one (assuming robotics development lags further behind AI’s intellectual development). Hollywood seems to have grasped the logical consequences of AI surpassing humans in intellect and intelligence. In Hollywood’s most prescient visions of future AI, by the time human beings realize they answer to the machines, and not the other way around, the options left to them to rectify the situation are as damaging to humans as to AI, if not worse.

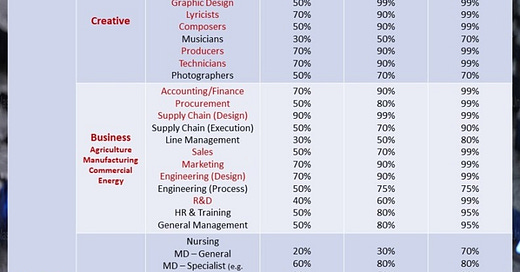

A Generational Outlook on AI Intrusion into Job Roles

As a thought exercise, I produced this table in an article over a year ago. The exercise was prompted by repeated questions from certain clients about how to leverage AI to solve every problem imaginable in business. This AI fervor motivated a broader investigation of the subject. The premises under which this list was created haven’t changed. Here are some of them:

· The ability of AI to create customized user specific entertainment will heavily impact creative spaces. (AI will allow people to watch movies where they’re the hero of every story.)

· Any area where there has already been widespread digitization of business data will be impacted first and the most (e.g. Finance and Accounting).

· Even in the engineering and science fields, the design functions will rapidly be dominated by AI because it will be able to do hundreds of virtual prototypes in the time humans could only produce one.

· Job roles dominated by memorization and pattern recognition will also be strong targets for displacement by AI.

Final Comments – Why is my opinion worth anything on this subject?

Perhaps enough of the detail in this article already resonates with the reader. If not, here are a few things to consider. One of my primary skills is strategy. In any strategy, two metaphorical limits are typically considered, either consciously or unconsciously:

1. “The Floor” – The worst or poorest possible outcome you can envision

2. “The Ceiling” – The best or most grandiose outcome you can envision

The key in developing strategies is understanding the potential for either to occur, the costs (of preventing the floor or achieving the ceiling), and the consequences to you and your organization. AI’s personal impact on someone’s profession, cognitive skills, etc. falls under a strategic approach called “planning for the worst and hoping for the best.” Why? Because, as outlined in this article, the downside risks for people, organizations, and professions could be history-altering.

Since the invention of digital processing and computers, humans have been displaced in “thinking jobs”, but those displacements occurred because other humans created and programmed the replacements. That higher order thinking (e.g. development and programming) has remained exclusively a human thing, but that is likely coming to an end.